Data Protection in a Company

Over the past few years, data security has become a priority for business owners. As technology develops, more and more areas of business are being digitized. This improves how companies operate, but it also exposes them to attacks by cybercriminals. We can’t guarantee 100% protection for confidential information, but by putting the right measures in place, we can minimize the risk of a leak and so protect both the company’s reputation and its budget.

In an era when employees are commonly allowed to use private devices for business purposes, security issues have never been more sensitive. Surveys show that only 40% of working people in Poland pay attention to protecting the equipment they work on. This poses quite a challenge for business owners, who must not only ensure the security of the data itself but also establish consistent rules for monitoring their employees’ private devices. We need to be aware of the consequences a data leak can bring, even if we run a small or medium-sized company. A leak of customers’ private information, whether caused by the deliberate actions of external hackers or by an employee who connected to an insecure open Wi-Fi network, can cost our company exorbitant sums of money (leaving aside the risk of liability under, for example, data protection regulations).

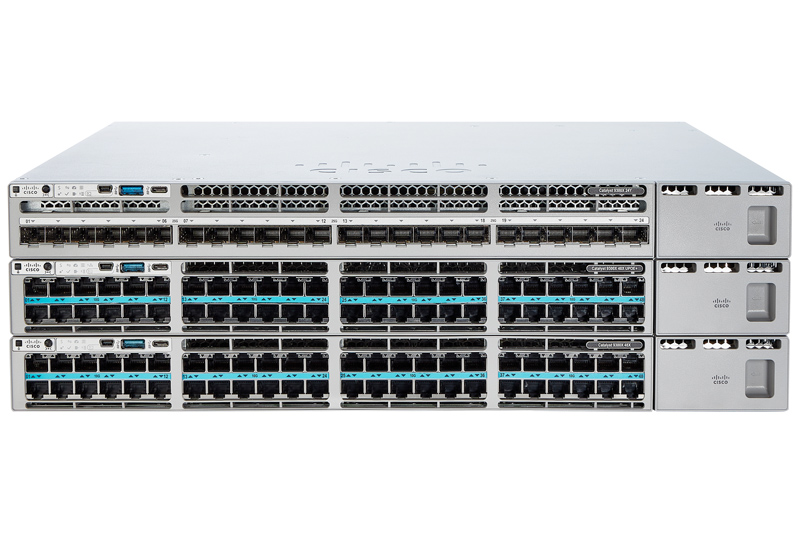

Threats do not come only from the network: equipment can also be stolen or physically damaged. That’s why we should make sure that the equipment vital to the company’s operation is properly secured, especially against access by outsiders.

When it comes to the data itself, establishing adequate oversight is even more important. The foundation is choosing the right security system, one that is tailored to our company. At this stage it is crucial to establish a data hierarchy, so that access to the company’s most important information, such as confidential customer data, is reserved for authorized people: the employees who genuinely need that knowledge to perform their duties.

Let’s also ask ourselves an important question: what will we do if this data is lost? If we do not yet know the answer, we should set up a dedicated team as soon as possible, whose task will be to create backups periodically and store them securely. This way, if an attack deletes information or a device simply fails, we will be able to recover the data.

Even the best system will not work if it is not used by competent people. That’s why it is so important to make employees themselves aware of device security issues. Let’s start by drawing up a list of tasks that every employee must complete before connecting their device to company systems, and a second list describing recurring procedures (such as installing updates or regularly changing passwords). These lists will form the basis of employees’ knowledge, but separate training sessions, and introducing every new hire to the company’s security routine, may be needed to fully implement the security requirements.

Like any professional solution, a system for protecting confidential information first requires careful planning. We do not have to handle this ourselves, because some firms specialize in helping companies implement security measures. However, we should use common sense here as well: when hiring specialists, we should make sure they really are good at what they do. In the age of the Internet, we can find reviews of almost any service provider, and a good old recommendation from a partner company also works. Thanks to all these measures, we will be able to sleep peacefully, and our company will be able to operate without unpleasant surprises.