What is Cybersecurity?

The rapid pace of technological progress has brought unprecedented opportunities for innovation, communication and efficiency. However, as dependence on technology increases, so does the risk of cyber attacks. Cyber security has become one of the most pressing issues of our time, affecting individuals, companies and governments around the world. The consequences of cyber attacks can range from financial losses to disruption of critical infrastructure and even loss of human life.

In recent years, we have witnessed a number of high-profile cyber attacks, including the WannaCry ransomware attack that affected hundreds of thousands of computers worldwide, the Equifax data breach that exposed the confidential information of millions of people, and the SolarWinds supply chain attack that compromised many government agencies and private companies. These incidents underscore the seriousness of the cyber threat and the need for effective cybersecurity measures.

The current state of cybersecurity

Despite significant efforts to improve network security, the current state of cybersecurity remains precarious. Cyber attacks are becoming more sophisticated, more frequent and more damaging. Cybercriminals are constantly developing new attack methods and exploiting vulnerabilities in software and hardware systems.

Moreover, the COVID-19 pandemic has created new opportunities for attackers. With the rapid shift to remote work and online services, organizations are more exposed than ever. Phishing, ransomware and other forms of cyber attack have increased during the pandemic.

The most common cyber threats

There are many cyber threats that individuals, companies and governments should be aware of. Here are the most common:

- Malware – is malicious software designed to damage computer systems or steal sensitive information. Typical types of malware are viruses and Trojans.

- Ransomware – is a type of malware that is designed to extort money by blocking access to files or a computer system until a ransom is paid.

- Phishing – is a type of social engineering attack in which cybercriminals use emails, phone calls or text messages to trick people into divulging sensitive information or clicking on a malicious link.

- DDoS attacks (Distributed Denial of Service) – involve flooding a site or server with traffic, causing it to crash or become unavailable to users.

- Man-in-the-middle attacks – occur when an attacker intercepts and alters communications between two parties in order to steal sensitive information or inject malicious code.

- Zero-day exploits – take advantage of vulnerabilities in software or hardware that are unknown to the manufacturer and therefore not patched. Cybercriminals can use them to gain unauthorized access to systems or data.
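To make one of these threats more concrete, here is a minimal Python sketch of how a message authentication code can reveal the kind of tampering a man-in-the-middle attacker attempts. It assumes both parties already share a secret key over a safe channel; the key, the messages and the function names `sign` and `is_authentic` are illustrative assumptions, not part of any particular product or protocol.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag for the message using the shared key."""
    return hmac.new(key, message, hashlib.sha256).digest()


def is_authentic(key: bytes, message: bytes, tag: bytes) -> bool:
    """Return True only if the tag matches the message (constant-time compare)."""
    return hmac.compare_digest(sign(key, message), tag)


# Hypothetical values, for illustration only.
shared_key = b"example-shared-secret"
original = b"transfer 100 to account 42"
tag = sign(shared_key, original)

# An attacker who alters the message in transit cannot produce a valid tag
# without knowing the shared key.
tampered = b"transfer 900 to account 66"

print(is_authentic(shared_key, original, tag))
print(is_authentic(shared_key, tampered, tag))
```

A check like this only detects modification; it does not hide the message content. In practice, protocols such as TLS combine encryption with integrity checks of this kind.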

Cybersecurity challenges

There are several challenges we face in achieving effective cybersecurity. One of the primary challenges is the shortage of qualified cybersecurity professionals, which makes it difficult for organizations to find and hire the experts they need to protect their systems.

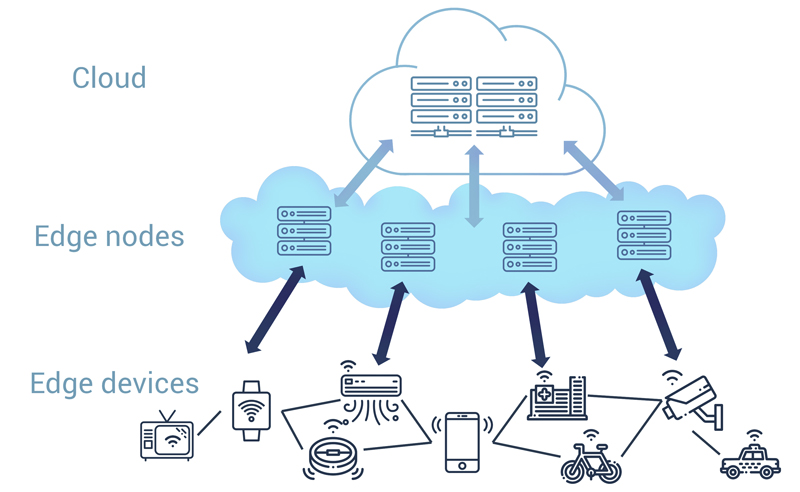

Another challenge is the complexity of modern technology systems. With the proliferation of IoT ("Internet of Things") devices, cloud computing and other emerging technologies, the attack surface has increased significantly, and this makes it more difficult to detect and respond to cyber attacks.

Emerging technologies and strategies

Despite these challenges, there are new technologies and strategies that offer hope for a more secure future. For example, artificial intelligence (AI) and machine learning (ML) can be used to detect and respond to cyber threats in real time. Blockchain technology has the potential to increase data security and privacy, while quantum computing may enable us to develop more secure encryption methods.

In addition, organizations are taking a more proactive approach to cyber security. This includes implementing security measures such as multi-factor authentication, training and awareness programs for employees, and continuous monitoring and testing of systems.
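As an illustration of multi-factor authentication, the following Python sketch generates and checks a time-based one-time password in the style of RFC 6238, the scheme commonly used by authenticator apps. It is a simplified example under stated assumptions: the function names `totp` and `verify` are hypothetical, the secret is a placeholder, and a real deployment would also need secure secret storage, rate limiting and tolerance for clock drift.

```python
import hashlib
import hmac
import struct
import time


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Derive a one-time code from the shared secret and the current time window."""
    counter = unix_time // step                 # number of elapsed time steps
    msg = struct.pack(">Q", counter)            # 8-byte big-endian counter
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                  # dynamic truncation offset
    chunk = digest[offset:offset + 4]
    code = struct.unpack(">I", chunk)[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, unix_time: int, step: int = 30) -> bool:
    """Check a submitted 6-digit code against the code expected for this time window."""
    return hmac.compare_digest(totp(secret, unix_time, step), submitted)


# Placeholder secret, for illustration only.
secret = b"12345678901234567890"
now = int(time.time())
code = totp(secret, now)
print(verify(secret, code, now))
```

The code changes every 30 seconds and depends on a secret the attacker does not hold, so a stolen password alone is not enough to log in.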

Summary

In conclusion, cyber security is a critical issue that affects all aspects of our lives. Cyber attacks have the potential to cause significant damage. However, there are new technologies and strategies that offer hope for a safer future. By working together, we can overcome cybersecurity challenges and build a safer, more protected digital world.