HPE FRONTIER - The world's most powerful supercomputer.

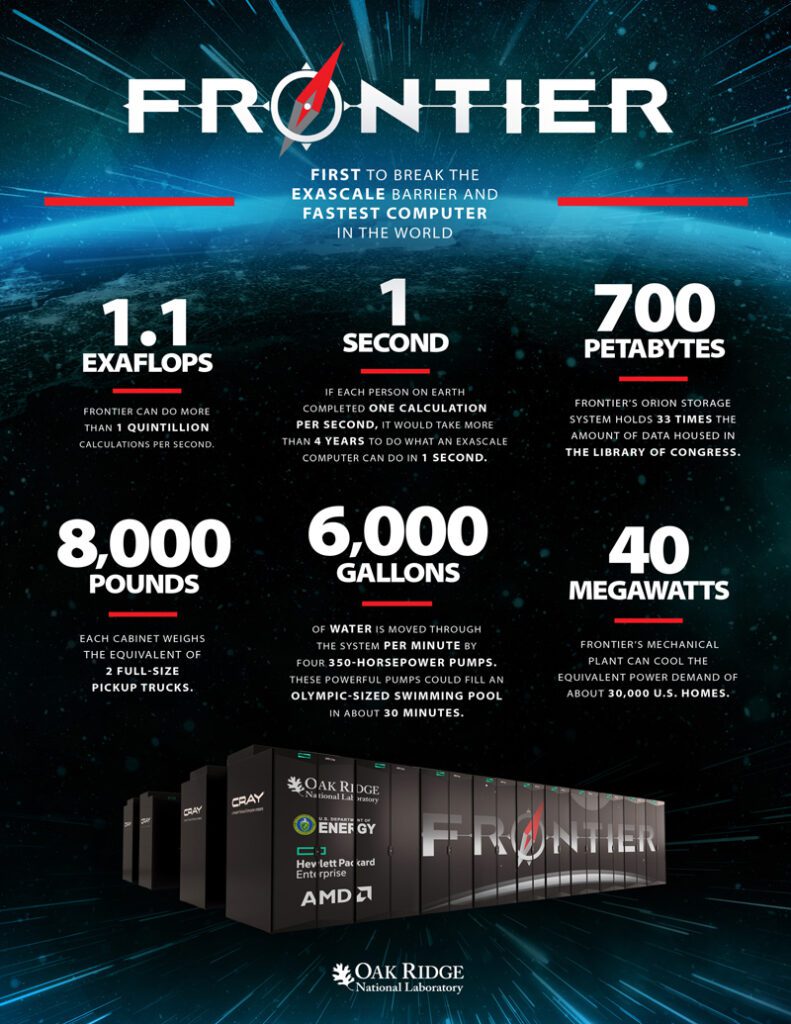

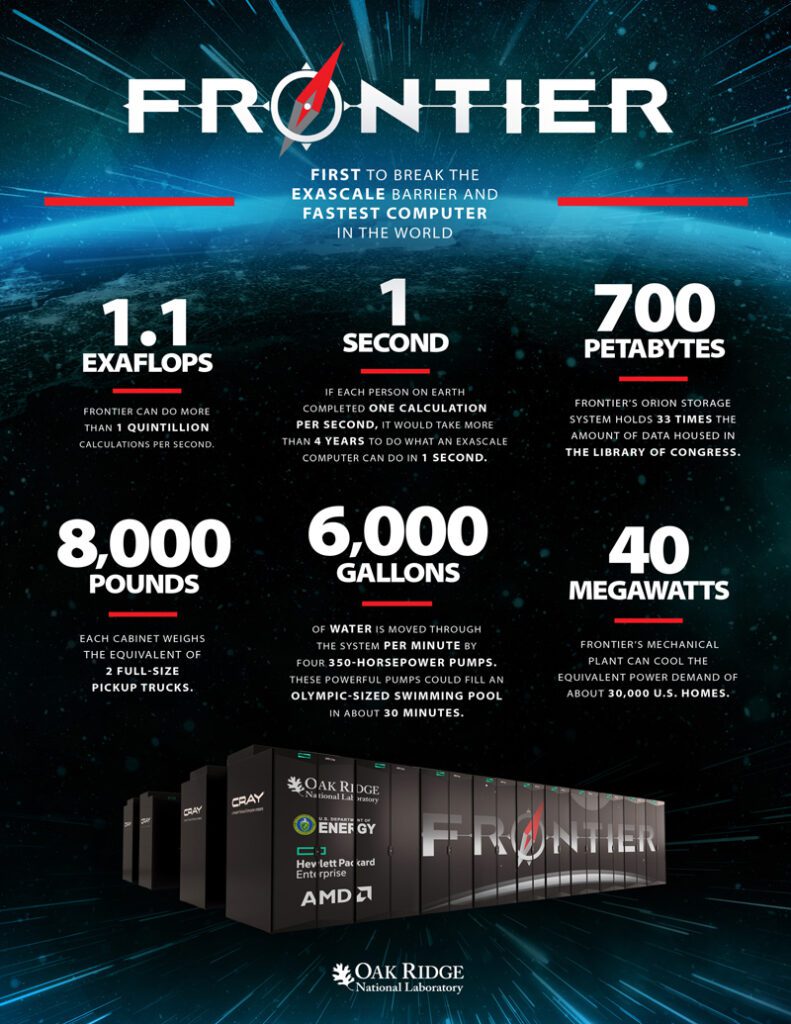

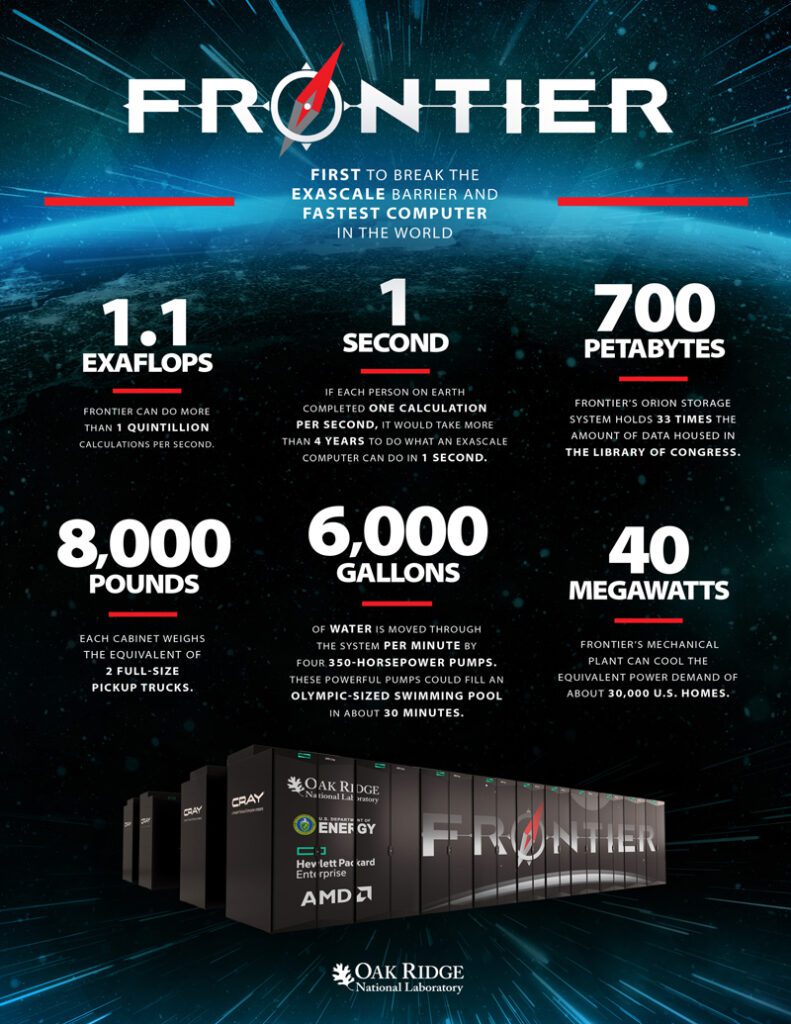

The Hewlett Packard Enterprise (HPE) Frontier supercomputer is one of the most powerful supercomputers in the world. It was developed in cooperation with the US Department of Energy (DOE) and is located at Oak Ridge National Laboratory in Tennessee, USA. The Frontier supercomputer was designed to help scientists solve the most complex and pressing problems in a variety of fields, including medicine, climate science and energy.

Tech specs

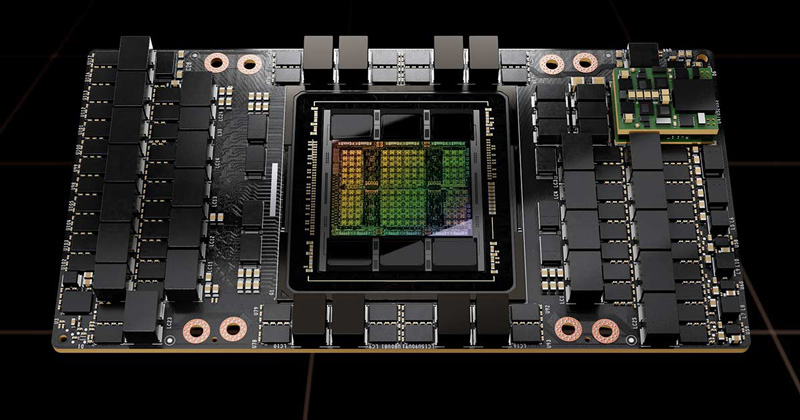

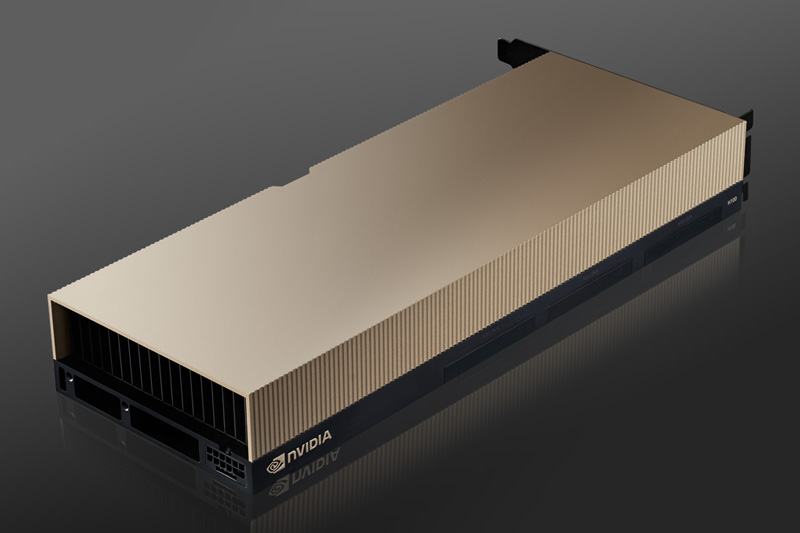

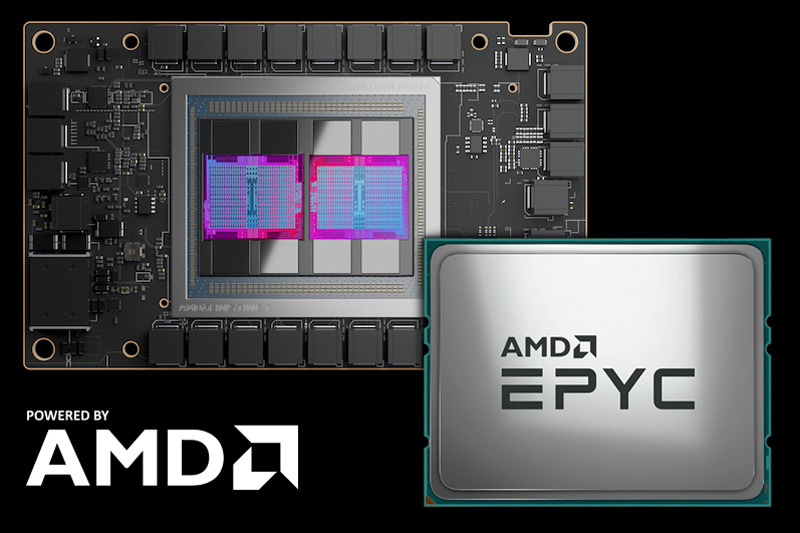

The HPE Frontier supercomputer is built on the HPE Cray EX supercomputer architecture, which combines AMD EPYC processors with AMD Instinct MI250X GPUs. Its peak performance is quoted at 1.5 exaflops. One exaflop is one quintillion (10^18) floating-point operations per second, so the system can perform about 1.5 million trillion calculations per second. The system has 100 petabytes of storage and can transfer data at up to 4.4 terabytes per second.
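To put these figures in perspective, the unit arithmetic can be sketched in a few lines of Python. This is only an illustration: the constants are the numbers quoted in this article, the function names are made up for the example, and decimal (SI) prefixes are assumed throughout.

```python
# Figures quoted in the article; not independently measured values.
PEAK_EXAFLOPS = 1.5
STORAGE_PETABYTES = 100
BANDWIDTH_TB_PER_S = 4.4

# Decimal (SI) prefixes are assumed for all conversions.
FLOPS_PER_EXAFLOP = 10**18
OPS_PER_TRILLION = 10**12
TB_PER_PB = 1000


def exaflops_to_trillions_per_second(exaflops: float) -> float:
    """Convert exaflops to trillions of operations per second."""
    return exaflops * FLOPS_PER_EXAFLOP / OPS_PER_TRILLION


def seconds_to_stream(petabytes: float, tb_per_s: float) -> float:
    """Time in seconds to move `petabytes` of data at `tb_per_s`."""
    return petabytes * TB_PER_PB / tb_per_s


if __name__ == "__main__":
    trillions = exaflops_to_trillions_per_second(PEAK_EXAFLOPS)
    hours = seconds_to_stream(STORAGE_PETABYTES, BANDWIDTH_TB_PER_S) / 3600
    print(f"{PEAK_EXAFLOPS} exaflops = {trillions:,.0f} trillion operations per second")
    print(f"Streaming {STORAGE_PETABYTES} PB at {BANDWIDTH_TB_PER_S} TB/s takes about {hours:.1f} hours")
```

Under these assumptions, 1.5 exaflops works out to 1.5 million trillion operations per second, and reading the full 100 petabytes at the quoted transfer rate would take a little over six hours.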

Applications

The HPE Frontier supercomputer is used for a wide range of applications, including climate modeling, materials science and astrophysics. It is also being used to develop new drugs and treatments for diseases such as cancer and COVID-19.

Climate modeling

The Frontier supercomputer is being used to improve our understanding of the Earth's climate system and to develop more accurate climate models. This will help scientists predict the impacts of climate change and develop mitigation strategies.

Development of materials

The supercomputer is also being used to model and simulate the behavior of materials at the atomic and molecular levels. This will help scientists develop new materials with unique properties, such as increased strength, durability and conductivity.

Astrophysics

The Frontier supercomputer is being used to simulate the behavior of the universe on a large scale, including the formation of galaxies and the evolution of black holes. This will help scientists better understand the nature of the universe and the forces that govern it.

Medical developments

The supercomputer is being used to simulate the behavior of biological molecules, such as proteins and enzymes, in order to develop new drugs and treatments for diseases. This will help scientists identify new targets for drug development and develop more effective treatments for a wide range of diseases.

Summary

The HPE Frontier supercomputer represents a major step forward in the development of high-performance computing. Its unprecedented computing power and storage capacity make it a valuable tool for researchers in many fields. Its ability to simulate complex systems at a high level of detail helps us better understand the world around us and develop solutions to some of the most pressing challenges facing humanity.