NVIDIA H100 - Revolutionary GPU accelerator for high-performance computing

NVIDIA, a leading graphics processing unit (GPU) manufacturer, has unveiled the NVIDIA H100, a revolutionary GPU accelerator designed for high-performance computing (HPC). It targets the most demanding workloads in artificial intelligence (AI), machine learning (ML), data analytics and more.

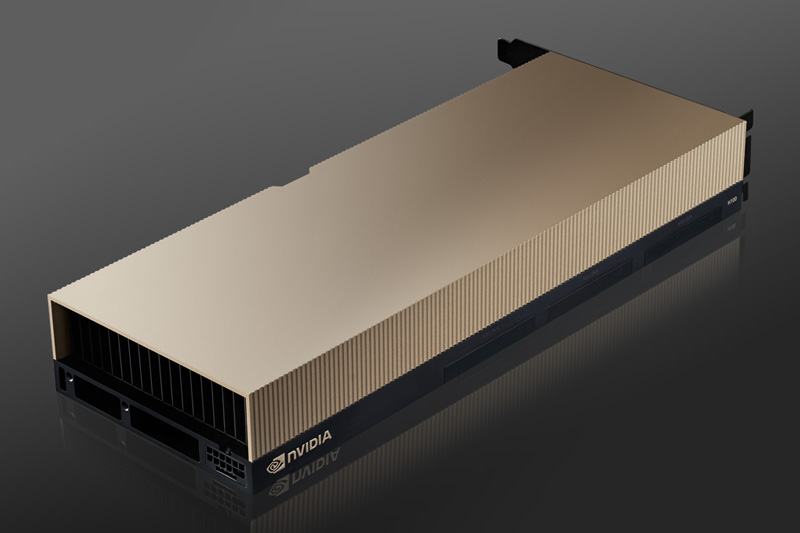

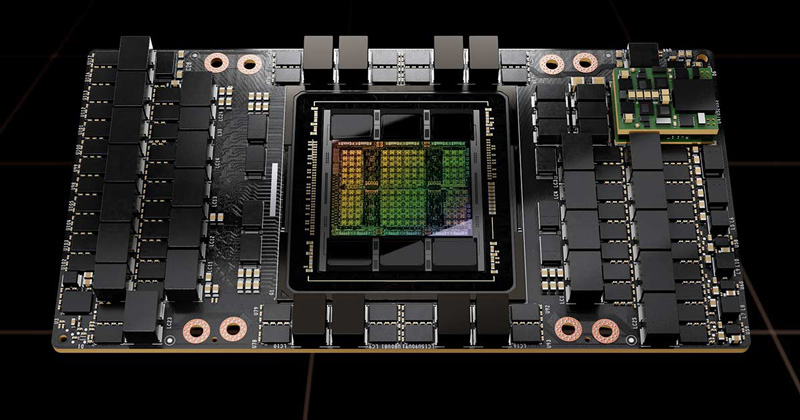

How it's built

The NVIDIA H100 is a powerful GPU accelerator based on NVIDIA's Hopper architecture, the successor to the Ampere architecture used in the A100 Tensor Core GPU. It is designed to deliver high performance for HPC and AI workloads and supports a wide range of applications, from deep learning to scientific simulation. The H100 follows the A100 as NVIDIA's flagship data center GPU and is one of the most powerful GPUs available on the market today.

Unique features

The NVIDIA H100 is equipped with several features that set it apart from other GPU accelerators on the market. Some of the most notable are:

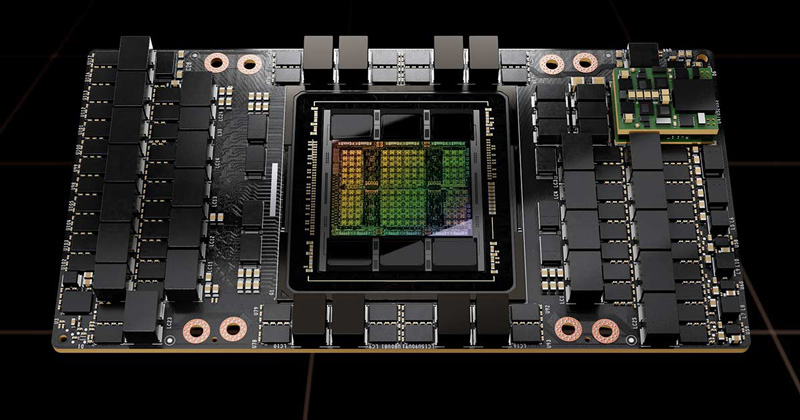

- High performance – The NVIDIA H100 is designed to deliver the highest level of performance for HPC workloads. According to NVIDIA's published specifications, the SXM version features 528 fourth-generation Tensor Cores and offers up to 34 teraflops in double precision (FP64) and up to 67 teraflops in single precision (FP32), with FP64 Tensor Core operations also reaching up to 67 teraflops.

- Memory bandwidth – The H100 SXM pairs 80 GB of HBM3 memory with about 3.35 TB/s of memory bandwidth (about 2 TB/s on the PCIe version), allowing it to handle large data sets and complex calculations.

- NVLink – The H100 also supports NVIDIA's fourth-generation NVLink interconnect, which provides up to 900 GB/s of GPU-to-GPU bandwidth and lets multiple GPUs work together on a single job. Faster data transfer between GPUs can significantly increase performance in HPC workloads.

- Scalability – The NVIDIA H100 is highly scalable and can be used in a wide variety of HPC applications. It can be deployed in both on-premises and cloud environments, making it a flexible solution for organizations of all sizes.
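To put the memory bandwidth figure in perspective, the following minimal sketch estimates how long a single pass over a data set takes at peak bandwidth. The function name `sweep_time_seconds` is hypothetical, and the bandwidth and capacity constants are assumptions taken from NVIDIA's published peak figures for the H100 SXM; sustained bandwidth in real applications is lower, so treat the result as a lower bound rather than a measurement.

```python
def sweep_time_seconds(data_gb: float, bandwidth_gb_per_s: float) -> float:
    """Lower bound on the time to read `data_gb` gigabytes once
    at the given peak memory bandwidth (in GB/s)."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return data_gb / bandwidth_gb_per_s


# Assumed peak figures for the H100 SXM (published specs, not measured values).
H100_SXM_BANDWIDTH_GB_S = 3350.0  # about 3.35 TB/s
H100_MEMORY_GB = 80.0

# Time to stream the entire 80 GB of on-board memory once.
full_sweep = sweep_time_seconds(H100_MEMORY_GB, H100_SXM_BANDWIDTH_GB_S)
print(f"One pass over {H100_MEMORY_GB:.0f} GB: {full_sweep * 1000:.1f} ms")
```

Under these assumptions a full pass over the card's memory takes on the order of tens of milliseconds, which is why bandwidth-bound workloads benefit so directly from faster memory.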

Comparison

When comparing the NVIDIA H100 to other GPU accelerators on the market, there are a few key differences to consider. Here is a brief comparison between the NVIDIA H100 and some of its top competitors:

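- NVIDIA H100 vs. NVIDIA A100 – The H100 is built on the newer Hopper architecture rather than the A100's Ampere architecture. In SXM form it offers roughly 1.6 times the memory bandwidth of the 80 GB A100 (about 3.35 TB/s versus about 2 TB/s) and adds fourth-generation Tensor Cores with FP8 support.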

- NVIDIA H100 vs. AMD Instinct MI100 – The H100 outperforms the MI100 in terms of single-precision performance, memory bandwidth and power efficiency.

- NVIDIA H100 vs. Intel Flex 170 – The H100 was designed specifically for HPC workloads, whereas Intel's Flex series is a more versatile accelerator with lower performance and far less memory (16 GB versus the H100's 80 GB).
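The memory comparison above reduces to a simple ratio. The sketch below is one way to express it; the `Accelerator` class and `memory_ratio` helper are hypothetical names introduced for illustration, and the only data used are the 80 GB and 16 GB capacities quoted in the comparison.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    """Minimal record of the one spec compared here (hypothetical helper type)."""
    name: str
    memory_gb: float


def memory_ratio(a: Accelerator, b: Accelerator) -> float:
    """How many times more on-board memory `a` has than `b`."""
    if b.memory_gb <= 0:
        raise ValueError("memory capacity must be positive")
    return a.memory_gb / b.memory_gb


# Capacities as quoted in the comparison list.
h100 = Accelerator("NVIDIA H100", 80.0)
flex_170 = Accelerator("Intel Flex 170", 16.0)

ratio = memory_ratio(h100, flex_170)
print(f"{h100.name} has {ratio:.0f}x the memory of {flex_170.name}")
```

Capacity alone does not determine performance, so a ratio like this is best read alongside bandwidth and compute figures rather than as a ranking on its own.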

Summary

The NVIDIA H100 is a powerful and versatile GPU accelerator designed for high-performance computing workloads. Its high performance, memory bandwidth and NVLink support make it an excellent choice for organizations that require superior computing power. Compared to its top competitors, the H100 stands out in HPC optimization and scalability, making it a leading accelerator in its class.