Virtual Machine - What Is It and Why Is It So Useful?

Many of today's cutting-edge technologies, such as cloud computing, edge computing and microservices, owe their origins to the concept of the virtual machine - the separation of operating systems and software instances from the underlying physical computer.

What is a virtual machine?

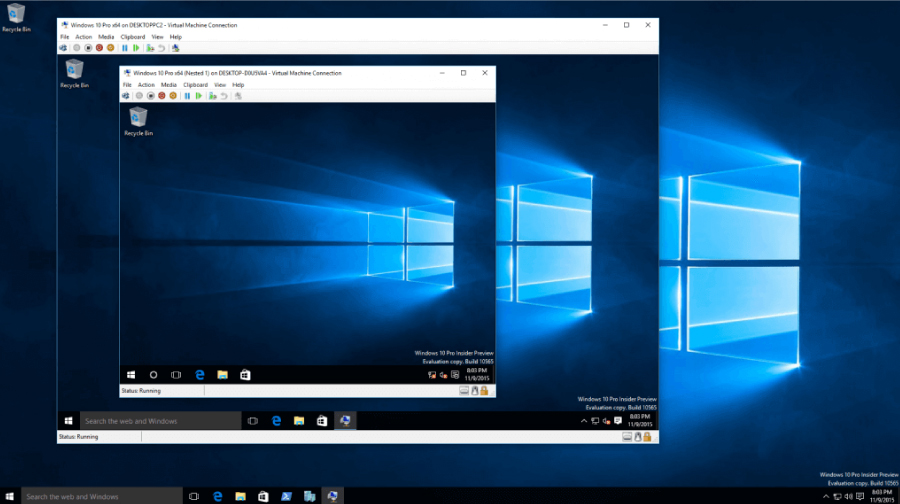

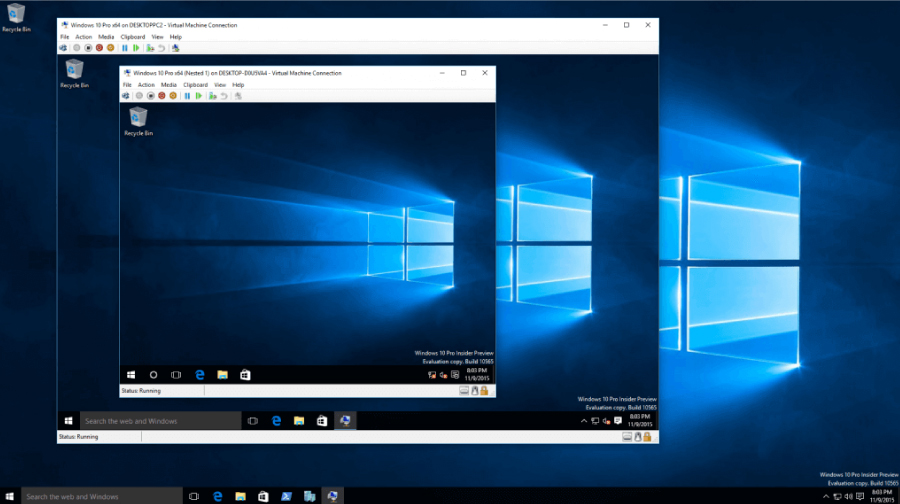

A virtual machine (VM) is software that runs programs or applications without being tied to a physical machine. A single host computer can run one or more guest VMs. Each VM has its own operating system and runs independently of other VMs, even if they are on the same physical host. VMs typically run on servers, but they can also run on desktop systems and even embedded platforms. Multiple virtual machines can share the resources of a physical host, including CPU cycles, network bandwidth and memory.

Virtual machines can be said to have originated at the dawn of computing in the 1960s, when time-sharing was used for mainframe users to separate software from the physical host system. A virtual machine was defined in the early 1970s as "an efficient, isolated duplicate of a real computer machine."

Virtual machines as we know them today gained popularity over the past 20 years as companies embraced server virtualization to use the processing power of physical servers more efficiently, reducing the number of servers and saving space in the data center. Because applications with different system requirements could run on a single physical host, separate server hardware was no longer required for each application.

How do virtual machines work?

In general, there are two types of virtual machines: process virtual machines, which run a single process, and system virtual machines, which offer full separation of the operating system and applications from the physical computer. Examples of process virtual machines include the Java Virtual Machine, the .NET Framework and the Parrot virtual machine. System virtual machines rely on hypervisors as intermediaries that give software access to hardware resources. A hypervisor emulates a computer's CPU, memory, hard drive, network and other hardware resources, creating a pool of resources that can be allocated to individual virtual machines according to their specific requirements. A hypervisor can support multiple virtual hardware platforms that are isolated from each other, allowing virtual machines to run Linux and Windows Server operating systems on the same physical host.

Well-known hypervisor vendors include VMware (ESX/ESXi), Intel/Linux Foundation (Xen), Oracle (Oracle VM Server for SPARC and Oracle VM Server for x86) and Microsoft (Hyper-V). Desktop systems can also use virtual machines. An example would be a Mac user running a virtual instance of Windows on their physical Mac hardware.
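To make the idea of a process virtual machine more concrete, here is a minimal sketch of a stack-based interpreter in Python. The instruction set (`PUSH`, `ADD`, `MUL`) and the `run` function are hypothetical and invented for illustration; they are not the bytecode of the Java Virtual Machine or .NET. The sketch only shows the general principle: the program targets an abstract machine rather than the physical CPU.

```python
# Hypothetical instruction set for illustration only; not JVM or .NET bytecode.
def run(program):
    """Execute a list of (opcode, operand) tuples on a simple stack machine."""
    stack = []
    for op, arg in program:
        if op == "PUSH":
            stack.append(arg)
        elif op == "ADD":
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == "MUL":
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        else:
            raise ValueError(f"unknown opcode: {op}")
    return stack.pop()


# Encodes the expression (2 + 3) * 4 for the abstract machine.
program = [("PUSH", 2), ("PUSH", 3), ("ADD", None), ("PUSH", 4), ("MUL", None)]
result = run(program)
print(result)
```

The same `program` list would run unchanged on any host that has a Python interpreter, which is roughly the portability argument made for process virtual machines.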

What are the two types of hypervisors?

The hypervisor manages and allocates resources to virtual machines. In addition, it plans and adjusts how resources are distributed based on the configuration of the hypervisor and virtual machines, and can reallocate resources when demand changes. Most hypervisors fall into one of two categories:

- Type 1 - A bare-metal hypervisor runs directly on a physical host and has direct access to its hardware. Type 1 hypervisors typically run on servers and are considered more powerful and better performing than Type 2 hypervisors, making them well suited for server, desktop and application virtualization. Examples of Type 1 hypervisors include Microsoft Hyper-V and VMware ESXi.

- Type 2 - Sometimes called a hosted hypervisor, a Type 2 hypervisor is installed on top of the host machine's operating system, which manages connections to hardware resources. Type 2 hypervisors are typically deployed on end-user systems for specific use cases. For example, a developer might use a Type 2 hypervisor to create a specific environment for building an application, or a data analyst might use it to test an application in an isolated environment. Examples include VMware Workstation and Oracle VirtualBox.
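The resource management described at the start of this section can be sketched as a toy model. The `HostPool` class, its methods and the VM names below are assumptions made for illustration and do not correspond to any real hypervisor API. The model also simplifies heavily: it hands out fixed shares and refuses requests that exceed capacity, whereas real hypervisors schedule CPU time dynamically and often allow overcommitment.

```python
from dataclasses import dataclass, field


@dataclass
class HostPool:
    """Toy model of a hypervisor's resource pool (all names are hypothetical)."""
    cpus: int
    memory_gb: int
    vms: dict = field(default_factory=dict)  # VM name -> (cpus, memory_gb)

    def free(self):
        """Return the (cpus, memory_gb) not yet assigned to any VM."""
        used_cpus = sum(c for c, _ in self.vms.values())
        used_mem = sum(m for _, m in self.vms.values())
        return self.cpus - used_cpus, self.memory_gb - used_mem

    def allocate(self, name, cpus, memory_gb):
        """Create or resize a VM; refuse if the pool cannot cover the request."""
        previous = self.vms.pop(name, None)  # release current share before checking
        free_cpus, free_mem = self.free()
        if cpus > free_cpus or memory_gb > free_mem:
            if previous is not None:
                self.vms[name] = previous  # restore the old allocation
            return False
        self.vms[name] = (cpus, memory_gb)
        return True


pool = HostPool(cpus=16, memory_gb=64)
pool.allocate("linux-web", 4, 16)
pool.allocate("windows-db", 8, 32)
ok = pool.allocate("linux-web", 8, 32)  # demand changed: resize an existing VM
too_big = pool.allocate("batch", 1, 1)  # pool is now fully assigned
print(pool.free(), ok, too_big)
```

Releasing a VM's current share before checking capacity lets a resize succeed when the new size fits only after the old allocation is given back.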

What are the advantages of virtual machines?

Because the software is separate from the physical host computer, users can run multiple operating system instances on a single piece of hardware, saving the company time, management costs and physical space. Another advantage is that VMs can support older applications, reducing or eliminating the need for, and cost of, migrating an older application to an updated or different operating system.

In addition, developers use VMs to test applications in a secure, sandboxed environment. Developers who want to see whether their applications will run on a new operating system can use VMs to test software instead of buying new hardware and a new operating system in advance. For example, Microsoft recently updated its free Windows virtual machines, which allow developers to download an evaluation virtual machine running Windows 11 to try out the operating system without having to upgrade their main computer. VMs can also help isolate malware that might infect a particular VM instance. Because the software in the virtual machine cannot manipulate the main computer, the malware cannot do as much damage.

What are the disadvantages of virtual machines?

Virtual machines have several drawbacks. Running multiple VMs on a single physical host can result in unstable performance, especially if the infrastructure requirements of the application are not met. In many cases, VMs are also less efficient than a physical computer. In addition, if a physical server fails, all applications running on it will stop working. Most IT shops strike a balance between physical and virtual systems.

What are other forms of virtualization?

The success of virtual machines in server virtualization has led to the application of virtualization in other areas, such as storage, networking and desktops. If a type of hardware is used in the data center, chances are it can be virtualized (application delivery controllers, for example). In network virtualization, companies are exploring network-as-a-service options and network functions virtualization (NFV), which uses commodity servers to replace specialized network equipment and enable more flexible and scalable services. This differs somewhat from software-defined networking, which separates the network control plane from the data forwarding plane to enable more automated, policy-based provisioning and management of network resources. A third technology, virtual network functions, consists of software-based services that can run in an NFV environment, including routing, firewalling, load balancing, WAN acceleration and encryption.

Verizon, for example, uses NFV to provide its virtual network services, which enable customers to launch new services and capabilities on demand. Services include virtual applications, routing, software-defined WANs, WAN optimization and even Session Border Controller as a Service (SBCaaS) for centrally managing and securely deploying IP-based real-time services such as VoIP and unified communications.

Virtual machines and containers

The development of virtual machines has led to further technologies such as containers, which take the concept a step further and are gaining recognition among web application developers. In a container environment, a single application with its dependencies can be virtualized. With much less overhead than a virtual machine, a container contains only binaries, libraries and applications. While some believe that containers could kill off the VM, VMs have enough capabilities and benefits to keep the technology going. For example, VMs remain useful when running multiple applications together or when running legacy applications on older operating systems. Also, according to some, containers are less secure than VMs running on a hypervisor because containers share a single operating system among applications, while VMs can isolate both the application and the operating system.

Gary Chen, research manager at IDC's Software-Defined Compute division, said virtual machine software remains a foundational technology, even as customers explore cloud and container architectures. "The virtual machine software market has been remarkably resilient and will continue to grow positively over the next five years, despite being highly mature and approaching saturation," Chen writes in IDC's study "Worldwide Virtual Machine Software Forecast, 2019-2022."

Virtual machines, 5G and edge computing

Virtual machines are seen as part of new technologies such as 5G and edge computing. For example, virtual desktop infrastructure (VDI) providers such as Microsoft, VMware and Citrix are looking for ways to extend their VDI systems to employees who now work from home under a post-COVID hybrid model. "With VDI, you need extremely low latency, because you're sending your keystrokes and mouse movements to essentially a remote desktop," says Mahadev Satyanarayanan, a professor of computer science at Carnegie Mellon University. In 2009, Satyanarayanan wrote about how virtual machine-based clouds could be used to provide better processing capabilities to mobile devices at the edge of the Internet, which led to the development of edge computing. In the 5G wireless space, network slicing uses software-defined networking and NFV technologies to help install network functionality on virtual machines on a virtualized server, providing services that used to run only on proprietary hardware. Like many of the technologies in use today, these innovations would not have emerged without the original virtual machine concepts introduced decades ago.

Source: https://www.computerworld.pl